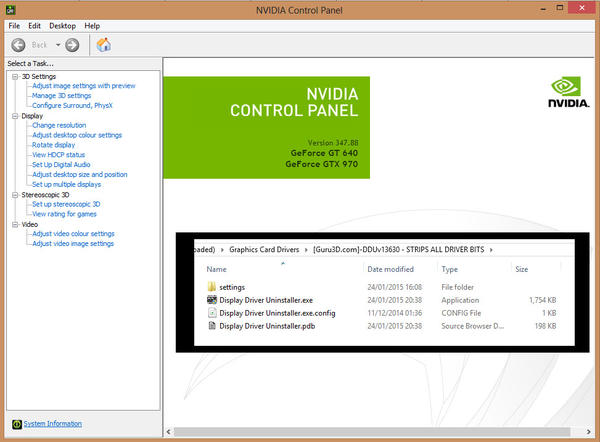

```
20:32:20.457 WARNING.
20:32:20.461 Iray - IRAY:RENDER :: 1.0 IRAY rend info : Rendering.
20:32:20.461 Iray - IRAY:RENDER :: 1.0 IRAY rend info : Rendering with 0 device(s):
....\src\pluginsource\DzIrayRender\dzneuraymgr.cpp(353): Iray - IRAY:RENDER :: 1.0 IRAY rend error: No device specified or usable
```

I am trying to deploy a PyTorch image classification model wrapped in Flask on g4dn.xlarge instances (4 vCPU, 16 GB RAM, T4 GPU with 16 GB memory) on AWS.

To select the optimal number of workers, I performed some experiments.

Note:

* Only the `model.forward` part runs on the GPU; the rest of the steps in the application run on the CPU.
* I have added a timing logger to every step of the application.

Experiment 1: `gunicorn main.app:app -b 0.0.0.0:8000 --workers 1`

* Total time to process 15 requests by a client: 15.87s (`model.forward` takes 14.98s)

Experiment 2: `gunicorn main.app:app -b 0.0.0.0:8000 --workers 2`

* Concurrent requests: 2 (2 clients sending requests in parallel)
* Total time to process 15 requests by a client: 29.35s (`model.forward` takes 28.34s, 2x that of a single request; every other step takes a similar time)

Experiment 3: `gunicorn main.app:app -b 0.0.0.0:8000 --workers 3`

* Concurrent requests: 3 (3 clients sending requests in parallel)
* Total time to process 15 requests by a client: 43.82s (`model.forward` takes 41.81s, 3x that of a single request; every other step takes a similar time)

Using 3 workers lets me process 3 requests in parallel, but the overall processing time of those requests also becomes 3x, so there is no real improvement.

I initially thought CPU or I/O work was the bottleneck in the app, but after logging the time taken at each step in detail, I found that the bottleneck is the GPU processing (`model.forward` starts taking 2x to 3x as long).

Checking the process IDs of the workers for each request, I can also confirm that all the workers receive the requests in parallel, but they are not able to perform the GPU processing in parallel.

Any guidance on what the bottleneck could be here would be very helpful.
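The per-step timing logger mentioned in the note could look roughly like the sketch below. `StepTimer` and its `step` method are hypothetical names made up for illustration; they are not part of Flask, gunicorn, or PyTorch. One caveat worth keeping in mind: CUDA kernels are launched asynchronously, so wall-clock timing around `model.forward` is only reliable if the GPU is synchronized before the clock is read. With PyTorch, that would presumably mean passing `torch.cuda.synchronize` as the `sync` hook (an assumed usage, not taken from the original app).

```python
import time
from contextlib import contextmanager
from typing import Callable, Dict, Iterator, List, Optional


class StepTimer:
    """Hypothetical per-step timing logger: records wall-clock seconds per named step."""

    def __init__(self, sync: Optional[Callable[[], None]] = None) -> None:
        # Optional hook run before each clock read (e.g. torch.cuda.synchronize),
        # so asynchronously queued GPU work is counted in the step that launched it.
        self.sync = sync
        self.timings: Dict[str, List[float]] = {}

    @contextmanager
    def step(self, name: str) -> Iterator[None]:
        if self.sync is not None:
            self.sync()  # drain earlier GPU work before starting the clock
        start = time.perf_counter()
        try:
            yield
        finally:
            if self.sync is not None:
                self.sync()  # wait for this step's GPU work to finish
            elapsed = time.perf_counter() - start
            self.timings.setdefault(name, []).append(elapsed)

    def total(self, name: str) -> float:
        """Sum of all recorded durations for one step (0 if never recorded)."""
        return sum(self.timings.get(name, []))


if __name__ == "__main__":
    timer = StepTimer()
    for _ in range(3):
        with timer.step("preprocess"):
            sum(range(10_000))  # stand-in for CPU-side preprocessing
        with timer.step("model.forward"):
            time.sleep(0.01)  # stand-in for the GPU forward pass
    for name, values in timer.timings.items():
        print(f"{name}: {len(values)} calls, {sum(values):.3f}s total")
```

Recording a list per step, rather than a single number, makes it easy to compare a per-request breakdown across the 1-, 2- and 3-worker runs.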
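The "no real improvement" conclusion can be checked with simple arithmetic on the reported numbers. This sketch assumes that in Experiments 2 and 3 all clients start together and each sends 15 requests, so roughly `clients × 15` requests complete within the per-client total time; the post does not state this explicitly. The `summarize` helper is hypothetical.

```python
# Reported results: (concurrent clients, per-client total s, per-client model.forward s)
EXPERIMENTS = [
    (1, 15.87, 14.98),
    (2, 29.35, 28.34),
    (3, 43.82, 41.81),
]
REQUESTS_PER_CLIENT = 15


def summarize(clients, total_s, forward_s, requests_per_client=REQUESTS_PER_CLIENT):
    """Return (aggregate req/s, seconds per request for one client, model.forward share)."""
    # Assumption: all clients run concurrently, so the aggregate finishes in ~total_s.
    aggregate_requests = clients * requests_per_client
    throughput = aggregate_requests / total_s
    latency = total_s / requests_per_client
    forward_share = forward_s / total_s
    return throughput, latency, forward_share


if __name__ == "__main__":
    for clients, total_s, forward_s in EXPERIMENTS:
        tput, lat, share = summarize(clients, total_s, forward_s)
        print(f"{clients} worker(s): {tput:.2f} req/s, {lat:.2f} s/request, "
              f"{share:.0%} in model.forward")
```

Under that assumption, aggregate throughput stays near 1 request per second (about 0.95, 1.02 and 1.03 req/s), while per-request latency grows roughly linearly with the worker count and `model.forward` accounts for about 95% of the time in every run.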
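A simple bottleneck model is consistent with these measurements. Suppose the workers' `model.forward` calls effectively take turns on the single T4 while the CPU steps run in parallel. This is an illustrative assumption, not a measurement of how the driver schedules separate processes. Under it, aggregate throughput is capped at one request per single-request GPU time, however many workers there are. The per-request costs below are derived from Experiment 1, and `predicted_client_time` is a hypothetical helper.

```python
REQUESTS_PER_CLIENT = 15
# Per-request costs estimated from Experiment 1 (one worker, one client).
GPU_S = 14.98 / REQUESTS_PER_CLIENT            # model.forward time per request
CPU_S = (15.87 - 14.98) / REQUESTS_PER_CLIENT  # all other steps per request


def predicted_client_time(workers, gpu_s=GPU_S, cpu_s=CPU_S, requests=REQUESTS_PER_CLIENT):
    """Predicted time for each of `workers` concurrent clients to finish `requests`
    requests, assuming CPU work overlaps across workers but the GPU runs one
    forward pass at a time."""
    parallel_limit = workers / (gpu_s + cpu_s)  # req/s if nothing were shared
    gpu_limit = 1.0 / gpu_s                     # req/s when the GPU is the constraint
    throughput = min(parallel_limit, gpu_limit)
    return workers * requests / throughput


if __name__ == "__main__":
    for w in (1, 2, 3):
        print(f"{w} worker(s): predicted {predicted_client_time(w):.2f}s per client")
```

With 2 or more workers the GPU limit is the binding term, so extra workers mainly add queueing in front of the GPU, and each `model.forward` call appears 2x to 3x slower because it waits for the others. The predictions (about 29.96s and 44.94s) are within roughly 3% of the measured 29.35s and 43.82s.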
